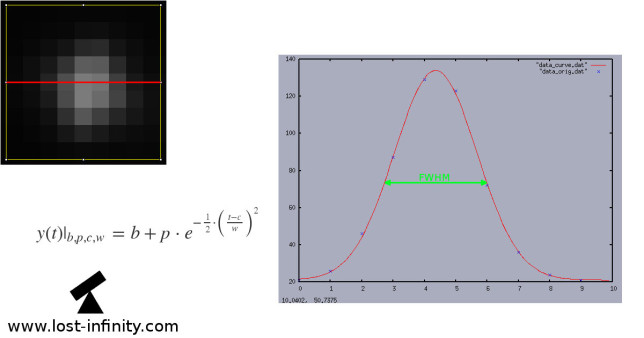

In Part 5 of my “Night sky image processing” series I wrote about measuring the FWHM value of a star using curve fitting. Another measure of star focus is the Half Flux Diameter (HFD). It was invented by Larry Weber and Steve Brady. The two main arguments for using the HFD are robustness and lower computational effort compared to the FWHM approach.

There is another article about the HFD available here. Another short definition of the HFD I found here. The original paper from Larry Weber and Steve Brady is available here.

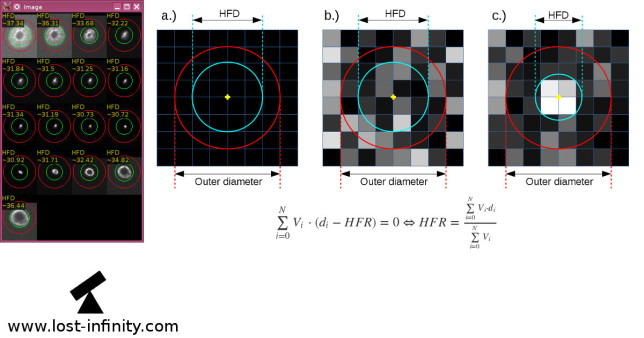

Definition of the HFD?

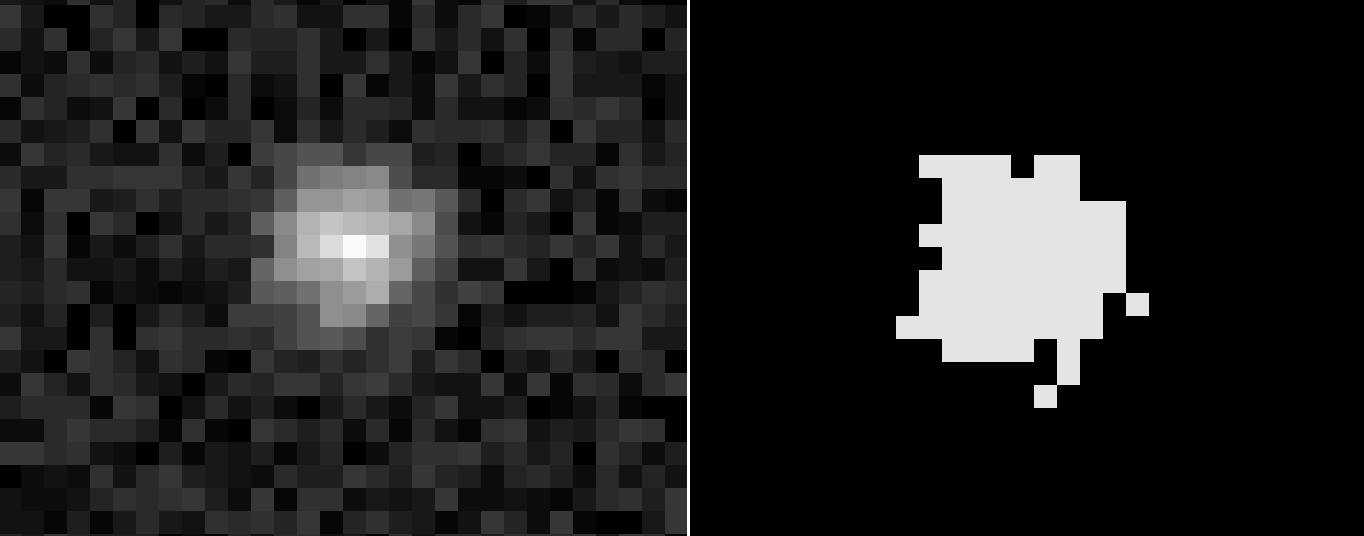

Let’s start with the definition: “The HFD is defined as the diameter of a circle that is centered on the unfocused star image in which half of the total star flux is inside the circle and half is outside.”

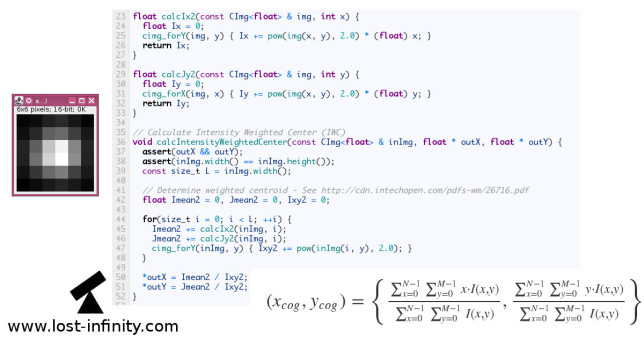

Expressed mathematically, this looks like:

$$\sum\limits_{i=0}^{N} V_i \cdot (d_i - HFR) = 0 \Leftrightarrow HFR = \frac{\sum\limits_{i=0}^{N} V_i \cdot d_i}{\sum\limits_{i=0}^{N} V_i}$$

where:

- $V_i$ is the pixel value minus the mean background value (!)

- $d_i$ is the distance from the centroid to each pixel

- $N$ is the number of pixels in the outer circle

- $HFR$ is the Half Flux Radius for which the sum becomes $0$; the HFD is twice this value, $HFD = 2 \cdot HFR$
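
To make the formula more concrete, here is a minimal Python sketch of how it could be evaluated on a small image. This is only an illustration under a few assumptions of mine, not the implementation from the original paper: the function name `half_flux_diameter` and its parameters are hypothetical, the image is a plain list of rows, integer pixel indices are treated as pixel centers, the centroid and the mean background are assumed to be known already, and negative background-subtracted values are clamped to zero. The HFD is returned as twice the HFR.

```python
import math


def half_flux_diameter(image, cx, cy, outer_radius, background=0.0):
    """Return the HFD as twice the flux-weighted mean distance (HFR).

    image        -- list of rows, each row a list of pixel values
    cx, cy       -- centroid of the star in pixel coordinates
    outer_radius -- radius of the outer circle in pixels
    background   -- mean background value subtracted from every pixel
    """
    weighted_distance_sum = 0.0  # sum of V_i * d_i
    flux_sum = 0.0               # sum of V_i

    for y, row in enumerate(image):
        for x, value in enumerate(row):
            d = math.hypot(x - cx, y - cy)  # d_i: distance to the centroid
            if d > outer_radius:
                continue  # pixel lies outside the outer circle
            # V_i: pixel value minus background.
            # Assumption: negative values are clamped to zero.
            v = max(value - background, 0.0)
            weighted_distance_sum += v * d
            flux_sum += v

    if flux_sum <= 0.0:
        raise ValueError("no flux above background inside the outer circle")

    hfr = weighted_distance_sum / flux_sum
    return 2.0 * hfr


# Tiny synthetic "star": background 10, a bright center, four dimmer neighbors.
star = [
    [10, 20, 10],
    [20, 110, 20],
    [10, 20, 10],
]
print(round(half_flux_diameter(star, cx=1, cy=1, outer_radius=1.5, background=10), 3))
# prints 0.571
```

In this example the center pixel contributes $V = 100$ at $d = 0$ and each of the four neighbors contributes $V = 10$ at $d = 1$, so $HFR = 40 / 140 \approx 0.286$ and the HFD is about $0.571$ pixels.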