In this article I shown a C++ implementation of the “max entropy” threshold algorithm using the CImg library. This implementation also performs for 16 and 32 bit / float images.

First, a little bit of context: Some time ago I implemented the OTSU threshold algorithm as a pre-processing step for image binarization. I used that threshold algorithm to distinguish the noise pixels from the potential “star” pixels. This worked quite well for the high contrast input images at that time. However, for weak stars it unfortunately failed badly.

The Max Entropy Threshold Algorithm

So I needed a threshold function which was able to address this problem! Again, I came across ImageJ. ImageJ has a list of many different threshold algorithms which you can directly apply to your image. This way I found the max entropy threshold algorithm which turned out to work perfectly for the old and the new input images.

The algorithm was first proposed by Karpur J.N., Sahoo P.K. and Wong A.K.C. (1985): “A New Method for Gray-Level Picture Thresholding Using the Entropy of the Histogram”. In June 2007 this algorithm was ported to the ImageJ library by G. Landini.

Dealing with the histogram

I found an implementation of this algorithm on the web. I translated this algorithm to a simple C++ program and… it turned out that it is terribly slow! It was easy to find out why: The max entropy threshold algorithm works with histograms. For my tests I was using a 16 bit image. A 16 bit image can have $2^{16}=65536$ different brightness levels i.e. the corresponding histogram will have 65536 different entries. The bigger the histogram, the higher the runtime of the max entropy algorithm – more precisely the order of execution is more or less $O(n^2)$ with $n$ as histogram size.

However, running the ImageJ Java implementation on my 16 bit image worked perfectly well – even for float images. In fact there was no difference in the runtime between images with different bit depths. So I had a closer look to the code. It turned out that they do a simple “trick”. The histogram is being “crushed” before the actual max entropy algorithm runs. Then, finally, the calculated threshold value needs to be transformed back, so that it fits to the original input image.

This was just not obvious from the code snippet. The ImageJ plugin code uses additional histogram classes which do the job under the hood. That did not really help to understand what was going on. However, after a while it became clear what needed to be done. The resulting C++ code is shown below. The comment sections indicate the additional steps which were necessary.

Code example

#include <iostream>

#include <vector>

#include <memory> /* std::unique_ptr */

#include <limits> /* numeric_limits */

#include <cmath> /* for std::abs(double) */

#include <CCfits/CCfits>

// We do not want X11 dependency

#define cimg_display 0

#include <CImg.h>

using ImageT = cimg_library::CImg<float>;

// https://stackoverflow.com/questions/19837576/comparing-floating-point-number-to-zero

// see Knuth section 4.2.2 pages 217-218

template <typename T>

static bool isAlmostEqual(T x, T y) {

return std::abs(x - y) <= std::numeric_limits<T>::epsilon() * std::abs(x);

}

float calcMaxEntropyThreshold(const ImageT & img) {

size_t numBins = 256;

std::vector<float> hist(numBins, 0);

float max;

float min = img.min_max(max);

std::cout << "numBins=" << numBins << ", min=" << min << ", max=" << max

<< ", img-dim (w x h)=" << img.width() << " x " << img.height() << std::endl;

/**

* IDEA: "Shrink" /map the float image pixel values to 256 possible

* brightness levels (i.e. 256 histogram bins). The reason is that

* the performance of the "max entropy algorithm" strongly depends

* on the histogram size / number of bins. Note that the threshold

* which will be calculated based on this histogram will be the

* correct one for this "shrinked" histogram. In order to get the

* threshold for the initial float image, this transformation needs

* to be reverted later (see comment below).

*/

cimg_forXY(img, x, y) {

int idx = (numBins - 1) * (img(x,y)-min) / (max - min);

++hist[idx];

}

// Normalize histogram (sum of all is 1)

float sum = img.width() * img.height();

std::vector<float> normHist(hist);

for(std::vector<float>::iterator it = normHist.begin(); it != normHist.end(); ++it) {

*it = *it / sum;

}

// Calculate accumulated histograms

std::vector<float> accumulatedHistBlack(numBins, 0);

std::vector<float> accumulatedHistWhite(numBins, 0);

float accumHistSum = 0.0F;

for (size_t idx = 0; idx < numBins; ++idx) {

accumHistSum += normHist[idx];

accumulatedHistBlack[idx] = accumHistSum;

accumulatedHistWhite[idx] = 1.0F - accumHistSum;

}

// Find first index of element not 0 in black distribution

size_t first_bin_idx = 0;

for (size_t idx = 0; idx < numBins; ++idx) {

if ( ! isAlmostEqual(accumulatedHistBlack[idx], 0.0F) ) {

first_bin_idx = idx;

break;

}

}

// Find last index of element not 0 in white distribution

size_t last_bin_idx = numBins;

for (size_t idx = numBins - 1; idx >= first_bin_idx; --idx) {

if ( ! isAlmostEqual(accumulatedHistWhite[idx], 0.0F) ) {

last_bin_idx = idx;

break;

}

}

std::cout << "first_bin_idx: " << first_bin_idx << ", last_bin_idx: " << last_bin_idx << std::endl;

float threshold = 0;

float max_ent = 0;

float ent_back;

float ent_obj;

float tot_ent;

for (size_t idx = first_bin_idx; idx < last_bin_idx; ++idx) {

/* Entropy of the background pixels */

ent_back = 0.0;

for ( size_t ih = 0; ih <= idx; ih++ ) {

if ( ! isAlmostEqual(normHist[ih], 0.0F) ) {

float c = normHist[ih] / accumulatedHistBlack[idx];

ent_back -= c * std::log(c);

}

}

ent_obj = 0.0;

for ( size_t ih = idx + 1; ih < numBins; ih++ ) {

if ( ! isAlmostEqual(normHist[ih], 0.0F) ) {

float c = normHist[ih] / accumulatedHistWhite[idx];

ent_obj -= c * std::log(c);

}

}

/* Total entropy */

tot_ent = ent_back + ent_obj;

if ( max_ent < tot_ent )

{

max_ent = tot_ent;

threshold = idx;

}

}

/**

* IMPORTANT: The histogram was "shrinked" to 256 values, i.e. float

* pixel value range was mapped to 256 brightness values.

* This "shrinking" step needs to be reverted so that the calculated

* threshold matches the original float image.

*/

float th2 = min + (threshold / numBins) * (max - min);

std::cout << "threshold: " << threshold << ", th2: " << th2 << std::endl;

return th2;

}

void readFits(ImageT * outImg, const std::string & inFilename) {

std::unique_ptr <CCfits::FITS> pInfile(new CCfits::FITS(inFilename, CCfits::Read, true));

CCfits::PHDU& image = pInfile->pHDU();

// read all user-specifed, coordinate, and checksum keys in the image

image.readAllKeys();

// Set image dimensions

outImg->resize(image.axis(0) /*x*/, image.axis(1) /*y*/, 1 /*z*/, 1 /*1 color*/);

// At this point the assumption that there is only 1 layer

std::valarray<unsigned long> imgData;

image.read(imgData);

cimg_forXY(*outImg, x, y)

{

(*outImg)(x, outImg->height() - 1 - y) = imgData[outImg->offset(x, y)];

}

}

void writeFits(const ImageT & inImg, const std::string & inFilename) {

long naxis = 2;

long naxes[2] = { inImg.width(), inImg.height() };

std::unique_ptr < CCfits::FITS > pFits;

pFits.reset(

new CCfits::FITS(std::string("!") + inFilename, USHORT_IMG,

naxis, naxes));

// NOTE: At this point we assume that there is only 1 layer.

long nelements = std::accumulate(&naxes[0], &naxes[naxis], 1,

std::multiplies<long>());

std::valarray<int> array(nelements);

cimg_forXY(inImg, x, y)

{

array[inImg.offset(x, y)] = inImg(x, inImg.height() - y - 1);

}

long fpixel(1);

pFits->pHDU().write(fpixel, nelements, array);

}

int main(int argc, char *argv[]) {

ImageT img;

if (argc != 2) {

std::cerr << "Usage: ./max_entropy <my_fits_file.fits>" << std::endl;

return 1;

}

std::string filename = argv[1];

try {

readFits(& img, filename);

float th = calcMaxEntropyThreshold(img);

std::cout << "TH: " << th << std::endl;

// +1 because CImg threshold function somehow uses >=

ImageT binaryImg = img.get_threshold(th + 1);

writeFits(binaryImg, "binary_img.fits");

} catch(CCfits::FitsException & exc) {

std::cerr << "Error loading FITS file: " << exc.message() << std::endl;

return 1;

}

return 0;

}

The following line compiles the code above:

g++ max_entropy.cpp -o max_entropy -lCCfits -lcfitsio -lpthread

The result

The resulting binary can be executed with:

./max_entropy weak_star.fits

The output is the calculated threshold (plus some debug output):

first_bin_idx: 0, last_bin_idx: 254 threshold: 54, th2: 18.8594 TH: 18.8594

I used the following three images as input for this test:

- https://www.lost-infinity.com/wp-content/uploads/no_star.fits

- https://www.lost-infinity.com/wp-content/uploads/weak_star.fits

- https://www.lost-infinity.com/wp-content/uploads/high_contrast_star.fits

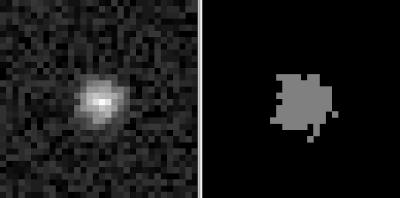

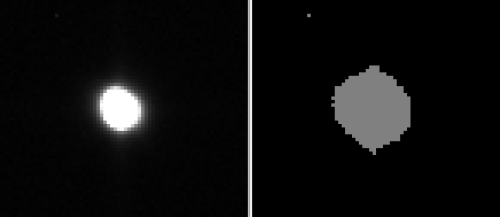

The first image shows the result for a weak star image.

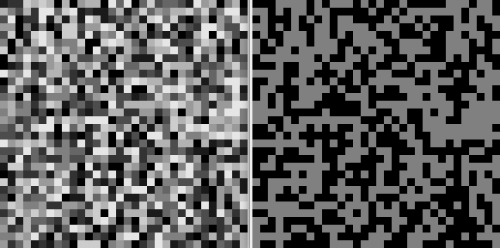

The second image shows the effect of the max entropy threshold algorithm on a noise image. Obviously the max entropy threshold algorithm alone is not enough to decide if there is a star or not.

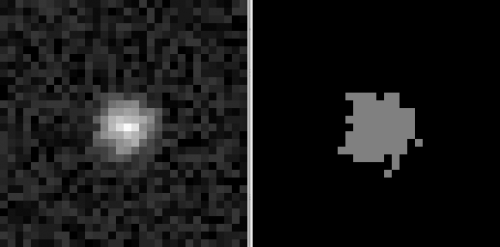

The last image shows a high contrast star image and the corresponding max entropy threshold image. Note the dot in the upper, left corner which also hits the threshold criteria.

Summary

In short, one can see that the algorithm does what it is supposed to do. It works for high contrast stars as well as for weak stars. It also behaves as expected for noise. Looking at the result also means, that the max entropy threshold algorithm alone is not sufficient to decide if there is a star in the area or not. But it can be combined for example with the Signal-to-noise ration (SNR). If for example the SNR is reasonable, the max entropy threshold algorithm can be applied to decide if there are one or more stars in the selected area.

Last updated: June 9, 2022 at 23:41 pm

Hi Carstern,

Great article! Will this program also run for 16 bit png images?

Hi TheProgrammingCurioso,

thanks for your feedback! Out of the box this code unfortunately does not run on 16 bit PNGs but it should be easy to change the code. In fact the library and the code to open the FITS files could even be removed. To open 16 bit PNGs you should be able to use the CImg library directly. I didn’t try it but I guess opening a PNG with CImg should work as described here. You may have to link to libpng additionally. In order to open a 16 bit PNG I guess you need something like this:

CImg image("image.png");Hope that helps!

Best regards

Carsten